I recently had cause to test out cert-manager using the Kubernetes Gateway API, but wanted to do this using a local cluster on my laptop, based on kind. I wanted cert-manager to automatically acquire an X.509 certificate on behalf of an application service running in the cluster, using the ACME protocol. This isn’t straightforward to achieve, as:

- The cluster, hosting cert-manager as an ACME client, runs on a laptop on a private network behind a router, using NAT.

- The certificate authority (CA) issuing the X.509 certificate, which provides the ACME server component, needs to present a domain validation challenge to cert-manager, from the internet.

Essentially, the problem is that the cluster is on a private network, but needs to be addressable via a registered domain name, from the internet. How best to achieve this?

Options

There are probably a million and one ways to achieve this, all with varying degrees of complexity. We could use SSH reverse tunnelling, or a commercial offering like Cloudflare Tunnel, or one of the myriad of open source tunnelling solutions available. I chose to use frp, “a fast reverse proxy that allows you to expose a local server located behind a NAT or firewall to the internet”. With 82,000 GitHub stars, it seems popular!

Frp

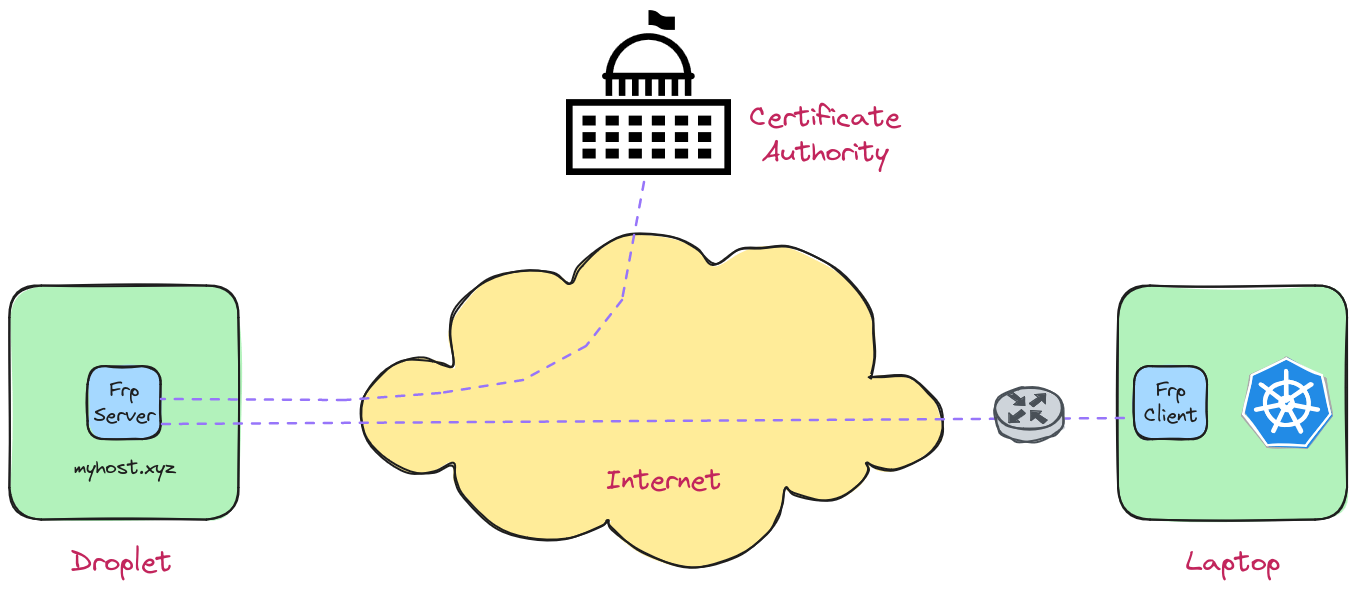

Frp uses a client/server model, with a component at each end of a tunnel: the server runs at the end that is publicly exposed to the internet, and the client runs on the private network behind the router. Traffic arriving at the frp server is routed through the tunnel to the frp client, according to the configuration provided for the two components.

Frp Server Configuration

For my scenario, I chose to host the frp server on a DigitalOcean droplet, with a domain name set to resolve to the droplet’s public IP address. This is the domain name that will appear in the certificate’s Subject Alternative Name (SAN). The configuration file for the server looks like this:

    # Server configuration file -> /home/frps/frps.toml

    # Bind address and port for frp server and client communication
    bindAddr = "0.0.0.0"
    bindPort = 7000

    # Token for authenticating with client
    auth.token = "CH6JuHAJFDNoieah"

    # Configuration for frp server dashboard (optional)
    webServer.addr = "0.0.0.0"
    webServer.port = 7500
    webServer.user = "admin"
    webServer.password = "NGe1EFQ7w0q0smJm"

    # Ports for virtual hosts (applications running in Kubernetes)
    vhostHTTPPort = 80
    vhostHTTPSPort = 443

In this simple scenario, the configuration provides:

- the interfaces and port number through which the frp client interacts with the server

- a token used by the client and the server for authenticating with each other

- access details for the server dashboard that shows active connections

- the ports the server will listen on for virtual host traffic (80 for HTTP and 443 for HTTPS)

Because this setup is temporary, and to make things relatively easy, the frp server can be run using a container rather than installing the binary to the host:

    docker run -d --restart always --name frps \
        -p 7000:7000 \
        -p 7500:7500 \
        -p 80:80 \
        -p 443:443 \
        -v /home/frps/frps.toml:/etc/frps.toml \
        ghcr.io/fatedier/frps:v0.58.1 -c /etc/frps.toml

A container image for the frp server is provided by the maintainer of frp, as a GitHub package. The Dockerfile from which the image is built can also be found in the repo.

Kind Cluster

Before discussing the client setup, let’s just describe how the cluster is configured on the private network.

    $ docker network inspect -f "{{json .IPAM.Config}}" kind | jq '.[0]'
    {
      "Subnet": "172.18.0.0/16",
      "Gateway": "172.18.0.1"
    }

Kind uses containers as Kubernetes nodes, which communicate using a (virtual) Docker network provisioned for the purpose, called ‘kind’. In this scenario, it uses the local subnet 172.18.0.0/16. Let’s keep this in the forefront of our minds for a moment, but turn to what’s running in the cluster.

    $ kubectl -n envoy-gateway-system get pods,deployments,services
    NAME                                            READY   STATUS    RESTARTS   AGE
    pod/envoy-default-gw-3d45476e-b5474cb59-cdjps   2/2     Running   0          73m
    pod/envoy-gateway-7f58b69497-xxjw5              1/1     Running   0          16h

    NAME                                        READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/envoy-default-gw-3d45476e   1/1     1            1           73m
    deployment.apps/envoy-gateway               1/1     1            1           16h

    NAME                                    TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)               AGE
    service/envoy-default-gw-3d45476e       LoadBalancer   10.96.160.26   172.18.0.6    80:30610/TCP          73m
    service/envoy-gateway                   ClusterIP      10.96.19.152   <none>        18000/TCP,18001/TCP   16h
    service/envoy-gateway-metrics-service   ClusterIP      10.96.89.244   <none>        19001/TCP             16h

The target application is running in the default namespace in the cluster, and is exposed using the Envoy Gateway, acting as a gateway controller for Gateway API objects. The Kubernetes Gateway API supersedes the Ingress API. The Envoy Gateway provisions a deployment of Envoy, which proxies HTTP/S requests for the application, using a Service of type LoadBalancer. The Cloud Provider for Kind is also used to emulate the provisioning of a ‘cloud’ load balancer, which exposes the application beyond the cluster boundary with an IP address. The IP address is 172.18.0.6, and is on the subnet associated with the ‘kind’ network. Remember, this IP address is still inaccessible from the internet, because it’s on the private network, behind the router.
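The claim that the load balancer address sits on the ‘kind’ network can be checked with a little arithmetic. The following is a minimal sketch in POSIX shell; the helper names `ip_to_int` and `ip_in_cidr` are made up for this illustration, and the addresses are the ones shown in the output above.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# The body runs in a subshell, so changing IFS doesn't leak to the caller.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed if address $1 falls inside CIDR block $2 (e.g. 172.18.0.0/16).
ip_in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")   # part before the slash
  bits=${2#*/}                 # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}
```

For example, `ip_in_cidr 172.18.0.6 172.18.0.0/16` succeeds, whereas the same check fails for the Service’s cluster IP, 10.96.160.26.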

If the frp client can route traffic received from the frp server, for the domain name, to this IP address on the ‘kind’ network, it should be possible to use cert-manager to request an X.509 certificate using the ACME protocol. Further, it’ll enable anonymous, internet-facing clients to consume applications running in the cluster on the private network, too.

Frp Client Configuration

Just as the frp server running on the droplet needs a configuration file, so does the frp client running on the laptop.

    # Client configuration file -> /home/frpc/frpc.toml

    # Address of the frp server (taken from the environment),
    # along with its port
    serverAddr = "{{ .Envs.FRP_SERVER_ADDR }}"
    serverPort = 7000

    # Token for authenticating with server
    auth.token = "CH6JuHAJFDNoieah"

    # Proxy definition for 'https' traffic, with the destination
    # IP address taken from the environment
    [[proxies]]
    name = "https"
    type = "https"
    localIP = "{{ .Envs.FRP_PROXY_LOCAL_IP }}"
    localPort = 443
    customDomains = ["myhost.xyz"]

    # Proxy definition for 'http' traffic, with the destination
    # IP address taken from the environment
    [[proxies]]
    name = "http"
    type = "http"
    localIP = "{{ .Envs.FRP_PROXY_LOCAL_IP }}"
    localPort = 80
    customDomains = ["myhost.xyz"]

The configuration file content is reasonably self-explanatory, but there are a couple of things to point out:

- For flexibility, the IP address of the frp server is configured using an environment variable rather than being hard-coded.

- The file contains proxy definitions for both HTTP and HTTPS traffic, for the domain myhost.xyz. The destination IP address for this proxied traffic is also taken from the environment (which evaluates to 172.18.0.6 in this particular scenario).

As the frp client gets some of its configuration from the environment, the relevant environment variables need to be set. In this case, the frp server is running on a DigitalOcean droplet, so its public IP address can be retrieved from the DigitalOcean API using doctl:

    export FRP_SERVER_ADDR="$(doctl compute droplet list --tag-name kind-lab --format PublicIPv4 --no-header)"

We know the local target IP address already, but this may be different in subsequent test scenarios, so it’s best to query the cluster to retrieve the IP address and set the variable accordingly:

    export FRP_PROXY_LOCAL_IP="$(kubectl get gtw gw -o yaml | yq '.status.addresses.[] | select(.type == "IPAddress") | .value')"
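If the Gateway hasn’t been assigned an address yet, or the droplet lookup matches nothing, these variables may end up empty, and the frp client would start with an unusable configuration. A small guard, sketched below, catches that before the container is launched. The function name `require_ipv4` is hypothetical, and the check only looks at the shape of the value (four dot-separated groups of digits), not at whether each octet is in range.

```shell
# Fail with a message unless the value looks like a dotted-quad IPv4 address.
# $1 = variable name (used only in the error message), $2 = value to check
require_ipv4() {
  if printf '%s\n' "$2" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
    return 0
  fi
  echo "error: $1 is not set to an IPv4 address (got '$2')" >&2
  return 1
}
```

Running `require_ipv4 FRP_SERVER_ADDR "$FRP_SERVER_ADDR" && require_ipv4 FRP_PROXY_LOCAL_IP "$FRP_PROXY_LOCAL_IP"` before starting the client stops early if either lookup came back empty.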

Just as the frp server was deployed as a container, so too can the frp client (the maintainer also provides a container image and a Dockerfile for the client):

    docker run -d --restart always --name frpc \
        --network kind \
        -p 7000:7000 \
        -v /home/frpc/frpc.toml:/etc/frpc.toml \
        -e FRP_SERVER_ADDR \
        -e FRP_PROXY_LOCAL_IP \
        ghcr.io/fatedier/frpc:v0.58.1 -c /etc/frpc.toml

The frp client container is attached to the ‘kind’ network, so that the traffic it proxies can be routed to the IP address defined in the FRP_PROXY_LOCAL_IP variable (172.18.0.6). Once deployed, the frp server and client establish a tunnel that proxies HTTP/S requests to the exposed Service in the cluster.

This enables cert-manager to request certificates for suitably configured Gateway API objects using the ACME protocol. It also allows a CA (for example, Let’s Encrypt) to reach cert-manager with an HTTP-01 challenge for proof of domain control, because the CA’s validation request for the domain now arrives at the cluster through the tunnel. (A DNS-01 challenge is validated through DNS records instead, so it doesn’t depend on the tunnel.) In turn, cert-manager is able to respond to the challenge, and then create a Kubernetes Secret containing the TLS artifacts (X.509 certificate and private key). The Secret can then be used to establish TLS-encrypted communication between clients and the target application in the cluster on the private network.
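As a rough illustration of what ‘suitably configured’ might look like, the sketch below prints a ClusterIssuer that solves HTTP-01 challenges through the Gateway, and a Gateway annotated for cert-manager. Several things here are assumptions rather than details of this setup: the issuer name `letsencrypt-staging`, the email address, the Secret names, the GatewayClass name `eg`, and the use of the Let’s Encrypt staging endpoint. The Gateway name `gw`, the default namespace and the domain myhost.xyz come from the commands above. It also assumes cert-manager has been installed with its Gateway API support enabled. The function names are hypothetical.

```shell
# Print a ClusterIssuer that answers HTTP-01 challenges via the Gateway.
# Issuer name, email and Secret name are placeholders (assumptions).
render_issuer() {
  cat <<'EOF'
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    email: you@example.com
    privateKeySecretRef:
      name: letsencrypt-staging-account-key
    solvers:
      - http01:
          gatewayHTTPRoute:
            parentRefs:
              - name: gw
                namespace: default
                kind: Gateway
EOF
}

# Print a Gateway whose HTTPS listener asks cert-manager for a certificate.
# The GatewayClass name and the TLS Secret name are assumptions.
render_gateway() {
  cat <<'EOF'
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: gw
  namespace: default
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-staging
spec:
  gatewayClassName: eg
  listeners:
    - name: http
      protocol: HTTP
      port: 80
    - name: https
      hostname: myhost.xyz
      protocol: HTTPS
      port: 443
      tls:
        mode: Terminate
        certificateRefs:
          - name: myhost-xyz-tls
EOF
}
```

Piping each function into `kubectl apply -f -` would create the objects, after which `kubectl get certificate` shows whether cert-manager has obtained the certificate. Starting with the staging endpoint avoids running into the production rate limits while experimenting.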

Conclusion

Not everyone wants to spin up a cloud-provided Kubernetes cluster for testing purposes; it can get expensive. Local development cluster tools, such as kind, are designed for just such requirements. But, you’ll always need to satisfy that one scenario where you need to access the local cluster from the internet, and sometimes with an addressable domain name. Frp is just one solution available, but it’s a comprehensive solution with a lot more features that haven’t been discussed here. Just to be clear, you should read up on securing the connection between the client and server, to ensure no eavesdropping on the traffic flow.
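As one starting point for that reading, frp can be told to refuse tunnel connections that aren’t protected by TLS. The sketch below appends the `transport.tls.force` setting to a server configuration file. The function name is hypothetical, and this is only one of several related options that frp documents (it encrypts the tunnel, but doesn’t by itself verify certificates), so check the documentation for the release you’re running before relying on it.

```shell
# Append a setting that makes frps reject non-TLS client connections.
# $1 = path to the server configuration file (e.g. /home/frps/frps.toml)
# Appending is only safe while the file has no [table] sections, as is the
# case for the server configuration shown earlier.
enforce_frps_tls() {
  # Do nothing if the setting is already present
  if grep -q '^transport\.tls\.force' "$1" 2>/dev/null; then
    return 0
  fi
  printf '\n# Only accept TLS connections from frp clients\ntransport.tls.force = true\n' >> "$1"
}
```

After running `enforce_frps_tls /home/frps/frps.toml` on the droplet, the server container needs a `docker restart frps` to pick up the change.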